Lisbon sits wedged between the Atlantic to the west and the river Tagus to the south. Its buildings, seemingly tanned to deep pastel shades, reflect a sun which seems forever present. Whilst it is the history of the city which appears to greet the visitor at first, whether it is the Tower of Belem or a monastery, in recent years Lisbon has embraced change. Fancy buildings such as the MAAT (Museum of Art, Architecture and Technology, pictured above) have sprung up, and tech startups such as muse.ai and Codacy have increasingly started to open Lisbon offices.

It is perhaps fitting that this year’s QuantMinds conference was held in Lisbon, in the first year it had adopted this new name, after over two decades under the banner of Global Derivatives. It seemed to be a major watershed year for the conference, and not purely because of the name change. Of course, derivatives pricing was still fairly prominent, as it has been in previous years. However, other areas, notably machine learning, were also taking a large share of the limelight. The broad takeaway was that machine learning was very much something many institutions were researching, even if many of these projects were not yet at the production stage. These projects ranged from using machine learning to detect anomalous trades to improving FX execution.

From the past to the future in quantitative finance

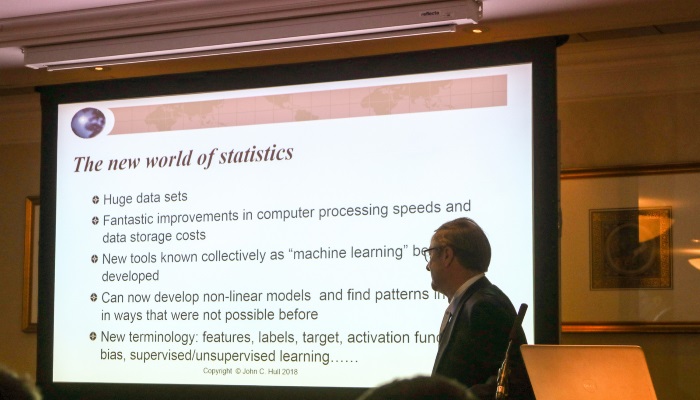

John Hull, known for his work in derivatives pricing, including the development of the Hull-White model for rates, and his ever-popular book on derivatives (which I expect many readers have sitting on their bookshelves), was presenting on the subject of machine learning. Perhaps this is a sign of how quantitative finance is now changing. He explained that machine learning was now a skill being taught to his students. With huge datasets and much more computing power, it was possible to use machine learning to infer relationships which could potentially be non-linear. He illustrated this with several case studies which had been used to enable his students to apply machine learning in practice. These included an exercise to cluster countries according to their relative riskiness, using inputs such as economic data. He also showed a case study which tried to answer the question of how implied vols change when the asset price changes. Lastly, he gave an example using data from Lending Club to try to classify those borrowers who were likely to default. He did, however, note a problem with the initial dataset, namely that it didn’t include those people who had been rejected for loans.

With all the talk of machine learning, it can be tempting to ignore the fact that simple models do work! Gerd Gigerenzer’s (Max Planck Institute) talk was on the use of simple heuristics to solve problems, rather than overcomplicated models. It is often a key question: how simple or complex should our model be? It was important, Gigerenzer noted, to know the difference between risk, where we know the entire search space, and uncertainty, where we don’t know all the alternatives. In situations of uncertainty, simple heuristics could do better than much more complicated models. Heuristics might be second best in “risk” settings (such as a game like Go), but these were unlike the real world. He illustrated the point with the gaze heuristic, which we intuitively use when we try to catch a ball. Taking the case of allocation in a portfolio, he suggested that equal weighting was often better than using a Markowitz approach.
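To make the allocation point a little more concrete, here is a minimal sketch (not taken from the talk) comparing the 1/N heuristic with unconstrained two-asset mean-variance weights. The function names, the two-asset restriction and all the input numbers are assumptions chosen purely for illustration.

```python
def equal_weights(n):
    """1/N heuristic: every asset gets the same weight, no estimation needed."""
    return [1.0 / n] * n


def mean_variance_weights_2(mu, cov):
    """Unconstrained two-asset mean-variance weights.

    Weights are proportional to inverse(cov) @ mu and rescaled to sum to one.
    mu is a list of two expected returns, cov a 2x2 covariance matrix.
    """
    (a, b), (c, d) = cov
    det = a * d - b * c
    # Closed-form inverse of a 2x2 matrix applied to mu
    raw = [(d * mu[0] - b * mu[1]) / det, (-c * mu[0] + a * mu[1]) / det]
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical inputs: two assets with the same variance and 45% correlation
cov = [[0.04, 0.018], [0.018, 0.04]]

w_a = mean_variance_weights_2([0.05, 0.06], cov)  # asset 2 expected to do better
w_b = mean_variance_weights_2([0.06, 0.05], cov)  # estimates swapped
w_eq = equal_weights(2)
```

In this toy setup, swapping two expected-return estimates that differ by only one percentage point flips the mean-variance weights between the assets, whereas the equal-weighted portfolio does not depend on the estimates at all. That sensitivity to estimation error is one common explanation for why the simple heuristic can hold up well out-of-sample.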

Emanuel Derman’s talk was entitled “Derivatives, the past and the future of quantitative finance”. He described Spinoza’s approach of describing emotions as derivations of three specific ones: desire, pleasure and pain. The concept of replication was a powerful valuation tool. If you don’t know how to value an asset, replicate it with assets which can be valued. He later discussed Markowitz’s work, which in a parallel way tried to define risk in terms of reward. Could we think of CAPM as a way of replicating return from risk? In 1973, Black-Scholes put together the ideas of diffusion, vol and replication. You can realistically hedge an option with its stock, because the correlation is really close to 1. They of course assumed that vol is unchanging. Because the model doesn’t really work, trading volatility became possible. Volatility thus became an asset class. In a sense, over the years, we have come to trade parameters more broadly. It has become easier for unskilled crowds to do this, because there is no need to replicate these instruments yourself, as with CDS or VIX derivatives. Of course, this accessibility could have a downside. We have seen this recently with XIV, which wiped out investors’ returns. For the future, Derman noted how change was coming, with a shift from derivatives modelling to modelling underliers, and towards market microstructure, creating microscopic models of market participants.

Market microstructure and execution

In recent years, themes around market microstructure have increasingly become a key element of the conference. This year was no exception. Rama Cont (Imperial College) presented work on using deep learning to understand high frequency data. His first presentation was on using deep learning to understand price formation on a dataset consisting of 5 years of NASDAQ order book data. We can think of price formation as being a function of both the price and the order data. Does such a function exist, and if so can we estimate it? Whilst we have simpler statistical models, maybe machine learning could help, in particular deep learning. He said the main argument against deep learning was that it needed a lot of data. Rather than attempting to train it on a stock by stock basis, he suggested instead using a massive training set of all the stocks to create a universal model. We could look at this as a classification problem where we have the state of the order book and features learned from past order flow. He noted that the universal model beat a single stock model in the vast majority of cases out-of-sample and typically did better than a linear model. The performance of the model also seemed stable even for periods where the test set was further in time from the training set, which seems to contradict the general idea that it is better to use more recent data for training a market based model. This suggests that there was a ‘long memory’ in price formation.

Later, he also presented a different study on market microstructure, this time on the idea of market impact. The square root law in market impact is a well known result, linking market impact with the notional size. He suggested that when considering the price impact of a trade, we should consider the aggregate order flow, not simply our own. There was also the question of the participation rate: how large are our trades compared to overall market transactions? Furthermore, we need to consider the direction of a trade. This seems intuitive. If we are buying, and everyone is selling, it would suggest that market impact would be more favourable toward us. He conjectured that the reason the square root law was so often observed was that trade duration was correlated with size. He concluded by suggesting that, when conditioning on trade duration, the price change accompanying a trade has very little dependence on the trade size. There was ultimately only one source of risk, namely volatility.
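For readers who have not come across it, the square root law is commonly written as impact ≈ Y × σ × √(Q / V), where Q is the order size, V the daily volume, σ the daily volatility and Y a constant of order one. The sketch below simply encodes that textbook form. It is not Cont's model, and the function name, the default Y = 1 and the example numbers are assumptions for illustration.

```python
import math


def sqrt_law_impact(order_size, daily_volume, daily_vol, y=1.0):
    """Textbook square root law: impact = y * daily_vol * sqrt(order_size / daily_volume).

    order_size and daily_volume are in the same units (e.g. shares or notional),
    daily_vol is the daily volatility as a fraction (0.02 = 2%),
    y is an empirical constant of order one (assumed to be 1.0 here).
    """
    return y * daily_vol * math.sqrt(order_size / daily_volume)


# Hypothetical example: 2% daily vol, orders of 1% and 4% of daily volume
small = sqrt_law_impact(1_000_000, 100_000_000, 0.02)
large = sqrt_law_impact(4_000_000, 100_000_000, 0.02)
```

Under this form, quadrupling the order size only doubles the estimated impact. Cont's point, as I understood it, was that this concave dependence on size may largely reflect the fact that bigger trades take longer to execute.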

When traders are executing a trade, they are faced with many questions. Which broker should I use? Which algorithm is most appropriate? Should we have a shorter order duration? For example, if the market is trending higher, and we are buying, we would be more inclined to front load our execution. Market makers need to understand what types of positions will come their way and also how to adjust their spreads. Patrick Karlsson (SEB) presented an approach to this using a combination of deep learning and reinforcement learning. The Q-learning element of the problem involved creating an agent-based environment for trading, where the rewards were for finishing an execution earlier and a better P&L. Conversely, the penalties applied were for too much market impact and executing too slowly. Features included price, volume and trades executed. The key result was an improvement in execution costs compared to a TWAP benchmark. The aggregator learnt not to execute on all trading venues at the same time and that some liquidity providers tend to hedge their risk more aggressively than others. It also learnt to execute more aggressively in trending environments.
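The talk did not go into implementation detail, and the approach described used deep learning alongside reinforcement learning. As a much simpler stand-in, the sketch below shows a single tabular Q-learning update, which is the basic idea underneath. The state and action names, the reward value and the learning parameters are all hypothetical.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.99):
    """Apply one tabular Q-learning update and return the new Q-value.

    q maps (state, action) pairs to estimated long-run reward.
    alpha is the learning rate, gamma the discount factor.
    """
    # Value of the best action available from the next state
    best_next = max(q[(next_state, a)] for a in actions)
    # Temporal-difference target: immediate reward plus discounted future value
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical execution agent with two actions
actions = ["execute_fast", "execute_slow"]
q = defaultdict(float)  # all Q-values start at zero

# Suppose trading quickly in a trending market earned a reward of 1.0
new_value = q_update(q, "trending", "execute_fast", 1.0, "flat", actions)
```

In an execution setting, the reward would combine the elements mentioned above, for example a bonus for finishing early and a good P&L, less penalties for market impact and slow execution. A deep Q-learning approach replaces the lookup table with a neural network over features such as price and volume.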

Michael Steliaros (Goldman Sachs) also discussed the theme of optimising your execution, in the context of a large portfolio of stocks. Managing transaction costs was becoming ever more important. He noted that it was important to understand the correlations within a portfolio when executing. At a portfolio level, correlations might impact risk. Intraday microstructure exhibits significant variability, he noted, in terms of intraday correlation, volatility and volume. There were typically higher correlations towards the end of the day.

Stefano Pasquale (BlackRock) spoke about developing a framework for liquidity risk management. The idea was to use machine learning to understand the best way to do portfolio liquidity optimisation. When would risks of redemption rise, which could cause pressures on liquidity? As part of this, it was also important to model transaction costs, and to understand the time it would take to trade a position. What was the best way to aggregate a portfolio? He discussed more generally the issues with machine learning. It might be the case that we have a lot of data, but it could be very noisy. He cited specific areas such as trying to forecast the traded volume of infrequently traded assets. Typically this quantity was very non-linear, and he suggested a machine learning approach could be better than typical approaches such as Kalman or GARCH. It was possible to enhance this volume data by adding data on trading axes. When traders disputed model generated prices, they would be asked to fill in text to explain why. This data could be parsed using NLP to extract features to improve model prices.

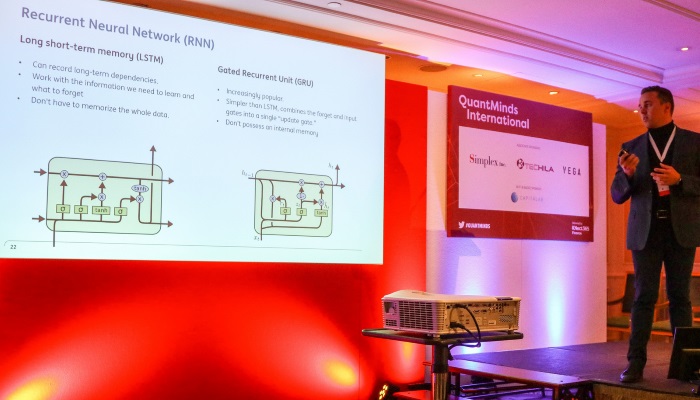

However, machine learning was not purely applied to execution or understanding price formation. Alexander Giese (UniCredit) described how it could be used to identify anomalous trades, typically to flag “fat finger” style trades. It could also be used to identify unusual trading behaviour, such as a trader trading an asset which they had never traded before. He approached the problem from a supervised learning perspective, using a training set of around 48,000 trades, with 2,000 trades being a validation set. The data had to be “oversampled” because typically there was a large imbalance in the training set (i.e. there were very few anomalous trades compared to normal trades). Matthew Dixon gave a talk in a similar area, trying to predict rare events with LSTMs (long short-term memory networks). Anomaly classification could include HFT price flips, which were typically a rare event when using very high frequency data. Again, he noted the importance of oversampling, and of doing it in such a way as to preserve the properties of a time series. He showed that his approach improved the prediction of “rare” events, but not so much the “common” events. When measuring the accuracy of such a prediction on imbalanced data, it was important to use something like an F1 score.
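To see why plain accuracy is misleading on imbalanced data, and what oversampling does to the class balance, here is a small self-contained sketch. It is not either speaker's code. The function names and the toy labels are assumptions, and the naive random oversampling shown here does not preserve time series structure, which Dixon stressed was important for his use case.

```python
import random


def f1_score(y_true, y_pred, positive=1):
    """F1 score: harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def oversample_minority(rows, labels, minority=1, seed=0):
    """Randomly duplicate minority-class rows until both classes are the same size."""
    rng = random.Random(seed)
    minority_rows = [r for r, l in zip(rows, labels) if l == minority]
    majority_count = sum(1 for l in labels if l != minority)
    n_extra = max(majority_count - len(minority_rows), 0)
    extra = [rng.choice(minority_rows) for _ in range(n_extra)]
    return rows + extra, labels + [minority] * n_extra


# Toy data: 98 normal trades (0) and 2 anomalous trades (1)
y_true = [0] * 98 + [1] * 2
always_normal = [0] * 100  # a "model" that never flags anything

accuracy = sum(t == p for t, p in zip(y_true, always_normal)) / len(y_true)
f1 = f1_score(y_true, always_normal)

balanced_rows, balanced_labels = oversample_minority(list(range(100)), y_true)
```

Here the model which never flags anything scores 98% accuracy but has an F1 score of zero, because it finds none of the anomalous trades. After oversampling, the training set contains as many anomalous examples as normal ones.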

Python, AI and Big Data

Python was a big topic at the event. Paul Bilokon (Thalesians) spoke about his Python based Thalesians TSA (time series analysis) library alongside an interactive demo. He showed how it was possible to use the library to quickly create stochastic processes with just a few lines of code, based on either simulated or real data. He also demonstrated how it could be used for various filtering techniques such as Kalman filters. Furthermore, it was possible to parallelise many of the computations, porting them to an IPython parallel cluster. He also showed how it was possible to have interactive graphics which would be updated as the algorithms were running. The library is currently available on GitHub here. Paul also later spoke on the links between AI, logic and probability, showing that in practice they were very closely linked, and wrapping into it some of the research work he did for his PhD in the area of domain theory.

I also spoke about Python alongside other subjects, using machine readable news to trade FX, as well as presenting another use case for large datasets in FX, namely transaction cost analysis. My Cuemacro colleague, Shih-Hau Tan, presented work on using high performance tools in Python to speed up code, without having to rewrite it extensively. Obviously, if we are prepared to rewrite code totally for optimal speed, we are likely to just use C++! He benchmarked various tools such as Dask and Numba when doing time series computations, which are typically done using pandas in Python. He showed how in many cases Dask did offer a speedup, with relatively minimal changes to the code.

There was also a discussion on the evolving nature of what a quant does from the team at Beacon and Simplex, who are creating a Python based quant platform, which included Beacon’s COO, Mark Higgins. The discussion noted how the quant skillset has evolved over the years. In many cases, quants were moving towards the business and into areas such as algo trading. With spreads tightening, it was more difficult to make money and hence it was necessary to be smarter when trying to manage risk. Calculations such as CVA and XVA are very computationally intensive, and often require the use of specialised hardware, such as GPUs. GPUs had made bigger inroads than FPGAs, because of higher level tools such as CUDA. Open source was making things easier, so it is no longer necessary to reinvent the wheel. Furthermore, cloud technology was making massive computing power more accessible. They also presented some systems built on a full stack of different underlying technologies, including for the case of presenting analysis to a client in an intuitive way.

Marcos Lopez de Prado (Cornell) discussed some of the reasons why machine learning didn’t always work in the context of a fund. One problem was the silo mentality. This might be appropriate for discretionary fund managers. However, quant based approaches often required a large element of teamwork. Furthermore, he suggested that when looking at time series data, simply resampling chronologically often resulted in losing information. It was better to resample based on information content, such as the dollar value traded. Also, when initially processing market data, we need to do so in a way such that it doesn’t lose its memory, which is a big part of understanding how to predict time series in the future. He also discussed the pitfalls associated with backtesting. If you do enough backtests, you will inevitably come up with a “good” strategy.
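Resampling by dollar value traded is often referred to as building “dollar bars”. The sketch below is a minimal illustration of the idea rather than Lopez de Prado's own implementation. The function name, the tick format of (price, size) pairs and the threshold are assumptions.

```python
def dollar_bars(ticks, threshold):
    """Group ticks into bars, closing a bar once the dollar value traded reaches threshold.

    ticks is an iterable of (price, size) pairs in time order.
    Returns a list of dicts with open, high, low, close and dollar_value.
    Ticks left over at the end that do not fill a bar are dropped.
    """
    bars = []
    prices = []
    traded = 0.0
    for price, size in ticks:
        prices.append(price)
        traded += price * size
        if traded >= threshold:
            bars.append({
                "open": prices[0],
                "high": max(prices),
                "low": min(prices),
                "close": prices[-1],
                "dollar_value": traded,
            })
            prices, traded = [], 0.0
    return bars


# Hypothetical ticks as (price, size)
ticks = [(100.0, 10), (101.0, 5), (102.0, 20), (101.0, 1), (100.0, 30)]
bars = dollar_bars(ticks, threshold=1500.0)
```

With time based bars, a quiet hour and a frantic hour each produce one observation. With dollar bars, busy periods generate more bars and quiet periods fewer, so each observation represents a roughly similar amount of market activity.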

Jan Novotny (HSBC) talked about using KDB for machine learning, and explained why KDB is very fast for dealing with columnar datasets. In finance, the most common dataset of this sort is of course time series data. Rather than moving data around (often in chunks), from a database outside to do analysis, it was possible to do a large amount of analysis within KDB itself, so the code lives next to the data. He explained the basic principles of the q language used within KDB.

Smart beta and risk

Smart beta is always a popular topic at the conference. This year was no exception. Nick Baltas (Goldman Sachs) talked about the impact of crowding on systematic ARP (alternative risk premia) strategies, a topic which was getting a lot more interest from market participants. He noted we could think of two types of ARP strategies, the first based on risk sharing/premia (for example carry) and the second based on price anomalies or behaviourally driven effects (like trend). The first tended to be subject to negative feedback loops, whereas the second tended to have positive feedback loops. It was tough to measure “crowdedness”. He noted that crowding’s impact was dependent on whether vol was rising or falling. Vol targeting tended to be useful for momentum. By contrast, you probably wouldn’t want to vol target a carry strategy.

Staying on the theme of machine learning, Artur Sepp (Julius Baer) discussed using machine learning to improve forecasts of volatility when trading volatility itself, typically when trying to monetise the volatility risk premium (the difference between implied and realised volatility).

Damiano Brigo (Imperial College) modelled the behaviour of rogue traders using utility functions. The key question was to examine ways of stopping traders taking too much risk. He modelled their behaviour using an S shaped utility curve. Unlike a bank, a trader ultimately has limited liability (basically getting fired). He showed that risk constraints such as expected shortfall (or VaR) essentially didn’t affect such tail seeking behaviour.

Blockchain, crypto and security

There was of course discussion of blockchain and cryptocurrencies, as you might expect, as well as the future of banking. Alexander Lipton (Stronghold Labs) discussed the changing landscape for banks. At its simplest level, a bank keeps a ledger and lends money. Banks need a large pool of reliable borrowers and need to attract depositors. He talked about how banking had evolved digitally, starting with 1980s online banking, for example through Minitel. Then you had the wave of digital hybrid banks. Now we are seeing digitally native banks. Banks need to understand how best to optimise their balance sheets. He talked about the various flavours of blockchain. There was also the question of which use cases to apply it to. Does everyone need to write to a blockchain? He noted that in many cases these projects are just at a proof of concept stage. He also noted the fundamental difference between banks and bitcoin. Banks essentially create money from thin air. With bitcoin, however, transactions are not based on credit. He also delved into the area of central bank issued digital cash. Such a concept could reduce the lower bound for negative interest rates. At present, there is effectively a floor, given the presence of cash. Whilst it might improve things like tax collection, it would not have the same anonymity properties associated with cash.

Paul Edge (EDP) discussed the concept of stable coins, such as Tether, which aimed to solve the problem of the huge amount of volatility associated with cryptocurrencies. Obviously, one big issue which I can see is that they can end up storing up the volatility for another time, in a similar way to the way that FX pegs might appear stable, until there’s a large loss of confidence.

In a somewhat different talk, a security expert, FreakyClown, discussed some of the precautions which were important for businesses to take. He noted that very often simple measures such as locking your computer screen were not done. He also suggested that designing your external perimeter was often not done correctly, with fences and doors being designed inappropriately. Entry points had to be secure. Yes, large windows might be attractive, but it was important to note that as easy as it was to look out, others could look in at our sensitive information on screens.

Conclusion

The conference proved to be quite different to previous years. Yes, option pricing was still a feature of the conference, as you would expect. However, it was very noticeable how machine learning and other data oriented talks were becoming a key part of the conference. This evolution seems natural. After all, a quant’s role within banking has expanded over the years. Quants are no longer mostly concentrated within derivatives pricing. We are now in many different areas, whether it is developing trading strategies or better understanding risks within financial institutions. It’s certainly an exciting time to be a quant, both within financial services and potentially also in areas outside of finance.

This article was kindly sponsored by QuantMinds. If you would like to view all the photos from the event, click here.